A Survey on Non-photorealistic Rendering Approaches for Point Cloud Visualization

Ole Wegen

Willy Scheibel

Matthias Trapp

Rico Richter

Jürgen Döllner

DOI: 10.1109/TVCG.2024.3402610

Room: Bayshore II

2024-10-17T14:39:00Z

Keywords

Point clouds, survey, non-photorealistic rendering

Abstract

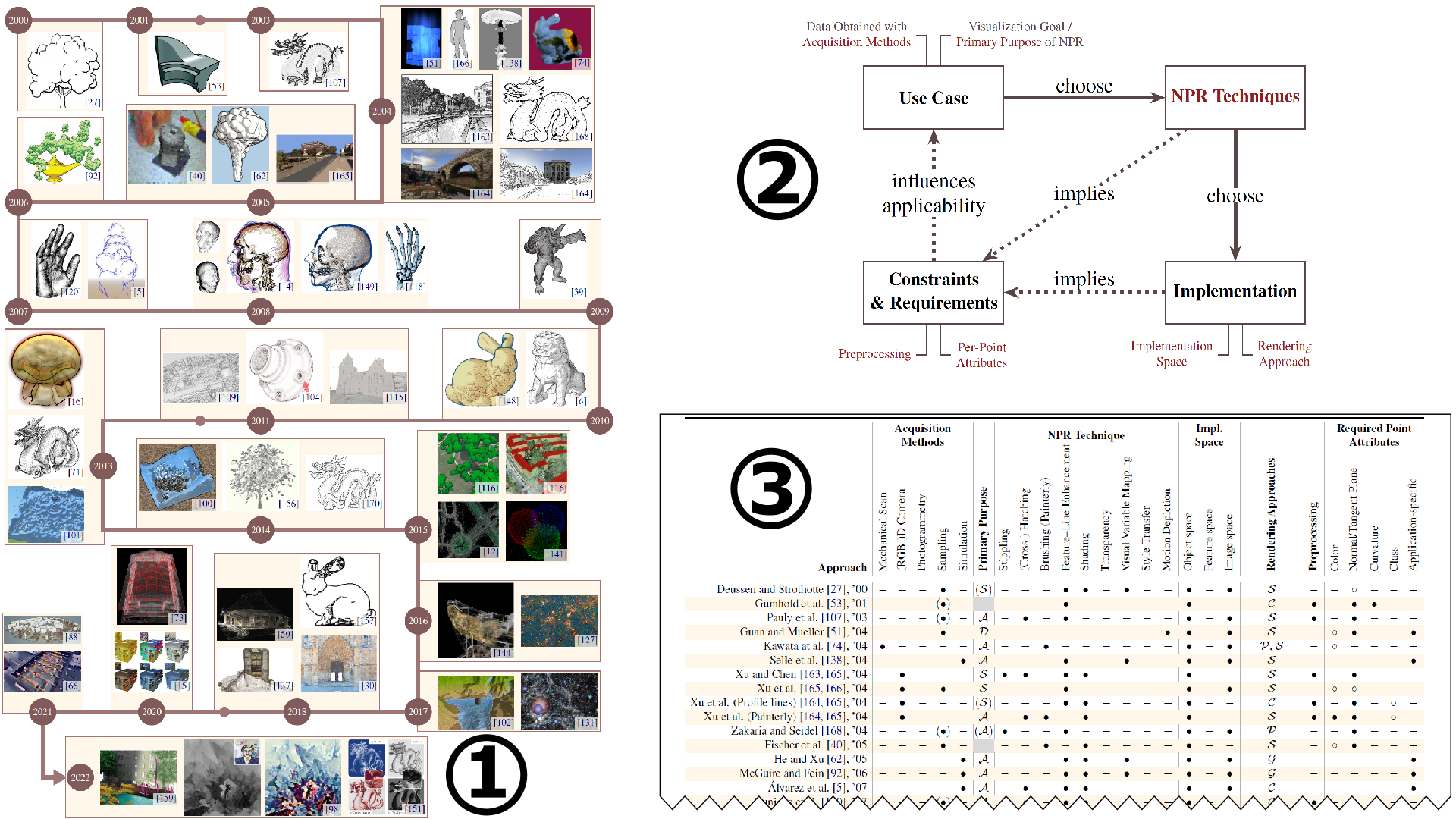

Point clouds are widely used as a versatile representation of 3D entities and scenes for all scale domains and in a variety of application areas, serving as a fundamental data category to directly convey spatial features. However, due to point sparsity, lack of structure, irregular distribution, and acquisition-related inaccuracies, results of point cloud visualization are often subject to visual complexity and ambiguity. In this regard, non-photorealistic rendering can improve visual communication by reducing the cognitive effort required to understand an image or scene and by directing attention to important features. In the last 20 years, this has been demonstrated by various non-photorealistic rendering approaches that were proposed to target point clouds specifically. However, they do not use a common language or structure for assessment, which complicates comparison and selection. Further, recent developments regarding point cloud characteristics and processing, such as massive data size or web-based rendering, are rarely considered. To address these issues, we present a survey on non-photorealistic rendering approaches for point cloud visualization, providing an overview of the current state of research. We derive a structure for the assessment of approaches, proposing seven primary dimensions for the categorization regarding intended goals, data requirements, used techniques, and mode of operation. We then systematically assess corresponding approaches and utilize this classification to identify trends and research gaps, motivating future research in the development of effective non-photorealistic point cloud rendering methods.