Chart2Vec: A Universal Embedding of Context-Aware Visualizations

Qing Chen

Ying Chen

Ruishi Zou

Wei Shuai

Yi Guo

Jiazhe Wang

Nan Cao

DOI: 10.1109/TVCG.2024.3383089

Room: Bayshore II

2024-10-17T12:54:00Z

Keywords

Representation Learning, Multi-view Visualization, Visual Storytelling, Visualization Embedding

Abstract

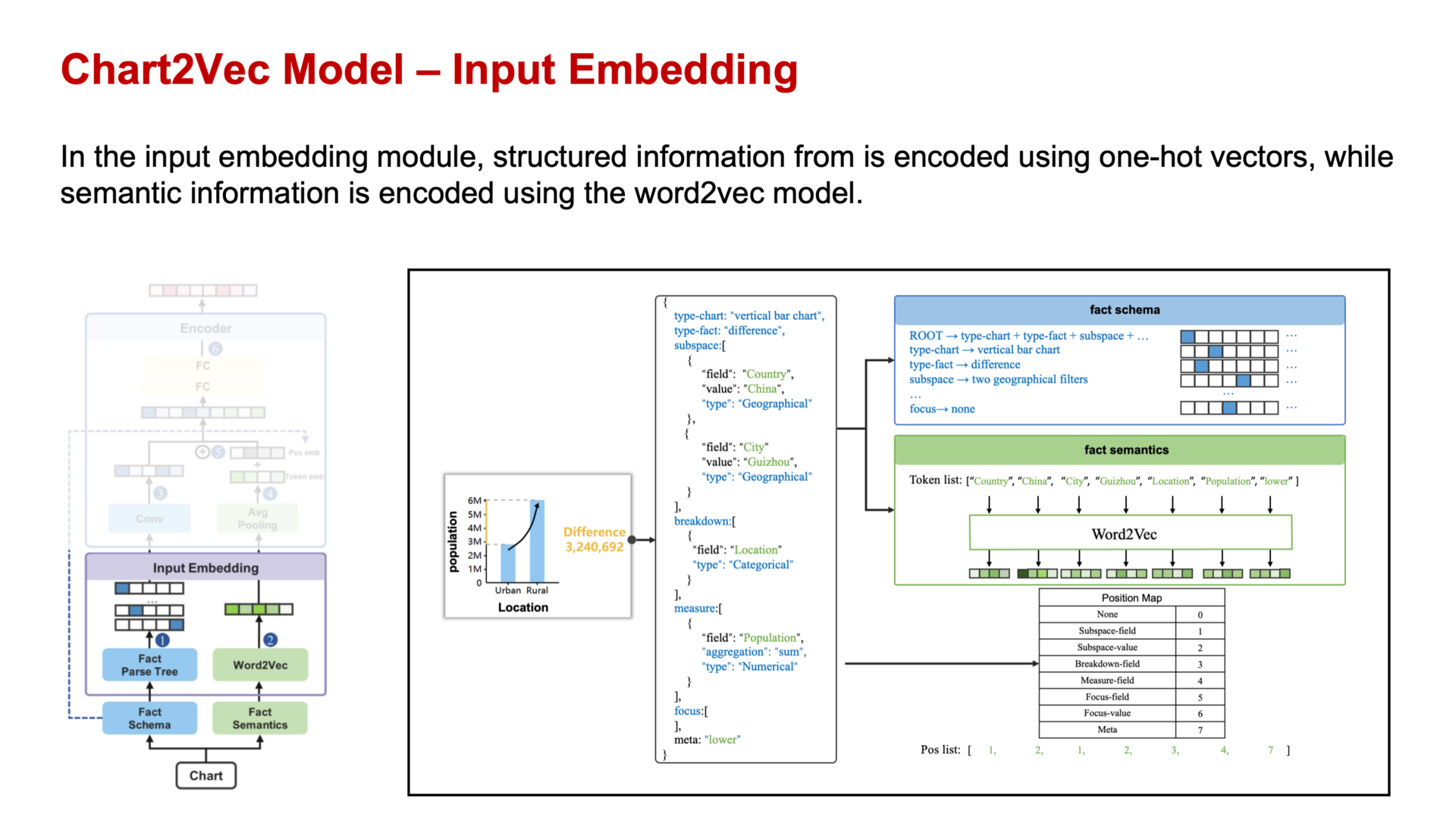

Advances in AI-enabled techniques have accelerated the creation and automation of visualizations over the past decade. However, presenting visualizations in a descriptive and generative format remains a challenge. Moreover, current visualization embedding methods focus on standalone visualizations and neglect the contextual information that matters for multi-view visualizations. To address these issues, we propose a new representation model, Chart2Vec, which learns a universal embedding of visualizations with context-aware information. Chart2Vec aims to support a wide range of downstream visualization tasks, such as recommendation and storytelling. Our model considers both the structural and the semantic information of visualizations expressed as declarative specifications. To enhance its context-aware capability, Chart2Vec employs multi-task learning on both supervised and unsupervised tasks concerning the co-occurrence of visualizations. We evaluate our method through an ablation study, a user study, and a quantitative comparison. The results verify that our embedding method is consistent with human cognition and show its advantages over existing methods.