Memory Recall for Data Visualizations in Mixed Reality, Virtual Reality, 3D, and 2D

Christophe Hurter

Bernice Rogowitz

Guillaume Truong

Tiffany Andry

Hugo Romat

Ludovic Gardy

Fereshteh Amini

Nathalie Henry Riche

DOI: 10.1109/TVCG.2023.3336588

Room: Bayshore II

2024-10-16T16:48:00Z

Keywords

Data visualization, Three-dimensional displays, Virtual reality, Mixed reality, Electronic mail, Syntactics, Semantics

Abstract

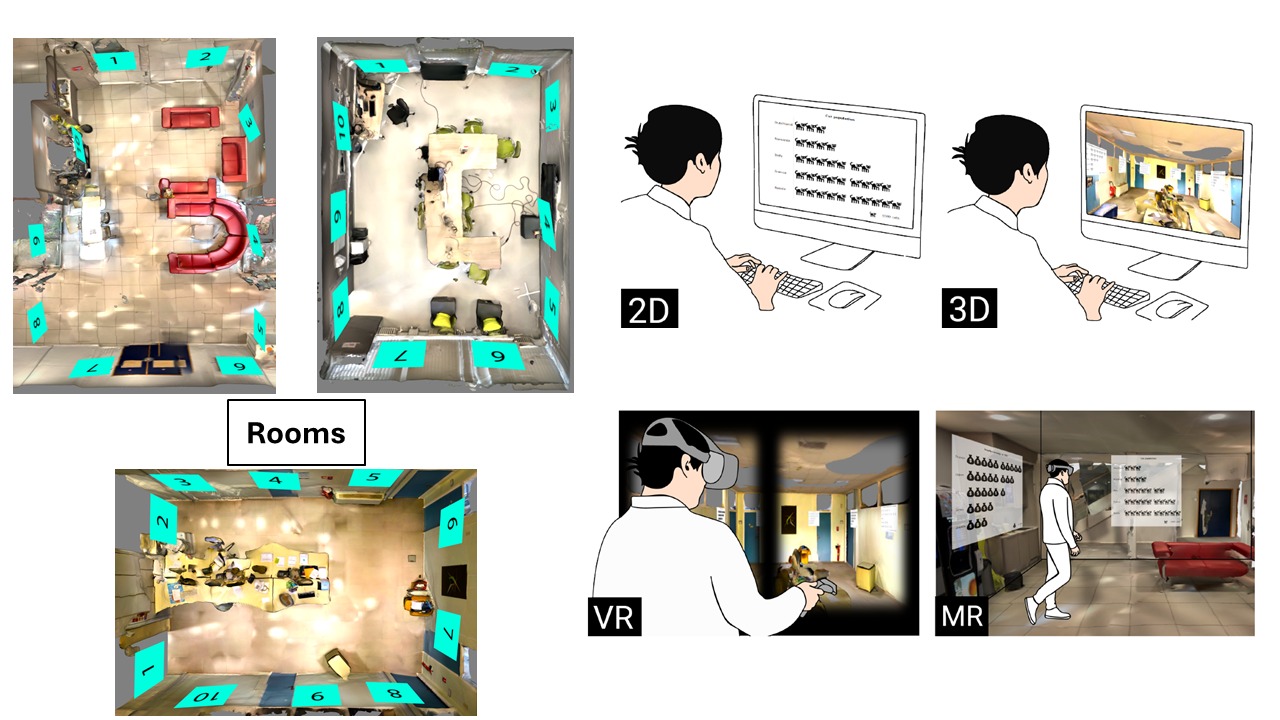

This article explores how the ability to recall information in data visualizations depends on the presentation technology. Participants viewed 10 Isotype visualizations on a 2D screen, in 3D, in Virtual Reality (VR) and in Mixed Reality (MR). To provide a fair comparison between the three 3D conditions, we used LIDAR to capture the details of the physical rooms, and used this information to create our textured 3D models. For all environments, we measured the number of visualizations recalled and their order (2D) or spatial location (3D, VR, MR). We also measured the number of syntactic and semantic features recalled. Results of our study show increased recall and greater richness of data understanding in the MR condition. Not only did participants recall more visualizations and ordinal/spatial positions in MR, but they also remembered more details about graph axes and data mappings, and more information about the shape of the data. We discuss how differences in the spatial and kinesthetic cues provided in these different environments could contribute to these results, and reasons why we did not observe comparable performance in the 3D and VR conditions.