Representing Charts as Text for Language Models: An In-Depth Study of Question Answering for Bar Charts

Victor S. Bursztyn - Adobe Research, San Jose, United States

Jane Hoffswell - Adobe Research, Seattle, United States

Shunan Guo - Adobe Research, San Jose, United States

Eunyee Koh - Adobe Research, San Jose, United States

Room: Bayshore VI

2024-10-17T14:42:00Z

Keywords

Machine Learning Techniques; Charts, Diagrams, and Plots; Datasets; Computational Benchmark Studies

Abstract

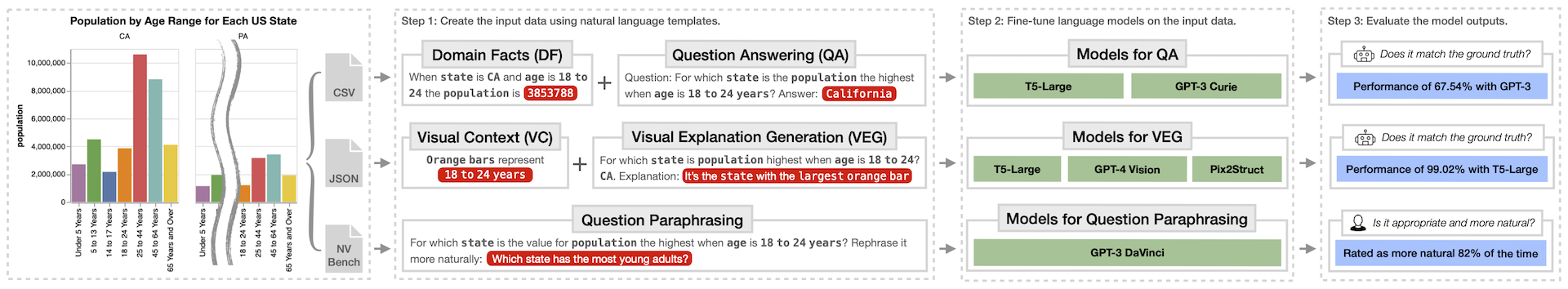

Machine learning models for chart-grounded Q&A (CQA) often treat charts as images, but performing CQA on pixel values has proven challenging. We therefore investigate a resource overlooked by current ML-based approaches: chart specifications, the declarative documents that describe how charts should visually encode data. In this work, we use chart specifications to enhance language models (LMs) for chart-reading tasks, so that the resulting system can robustly understand language for CQA. Through a case study with 359 bar charts, we test novel fine-tuning schemes on both GPT-3 and T5 using a new dataset curated for two CQA tasks: question answering and visual explanation generation. Our text-only approaches strongly outperform vision-based GPT-4 on explanation generation (99% vs. 63% accuracy) and show promising results for question answering (57-67% accuracy). Through in-depth experiments, we also show that our text-only approaches are mostly robust to natural language variation.
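To make the idea of representing a chart as text concrete, the following is a minimal sketch, not the authors' pipeline. It assumes a Vega-Lite-style JSON specification (the abstract does not name a specification format), and the data values, the prompt layout, and the helper names `build_cqa_prompt` and `tallest_bar` are all hypothetical choices made for illustration.

```python
import json

# Hypothetical Vega-Lite-style bar chart specification (illustrative data only;
# the specification format is an assumption, not taken from the paper).
EXAMPLE_SPEC = {
    "mark": "bar",
    "data": {
        "values": [
            {"category": "A", "value": 28},
            {"category": "B", "value": 55},
            {"category": "C", "value": 43},
        ]
    },
    "encoding": {
        "x": {"field": "category", "type": "nominal"},
        "y": {"field": "value", "type": "quantitative"},
    },
}


def build_cqa_prompt(spec: dict, question: str) -> str:
    """Serialize a chart specification and a question into one text prompt.

    The prompt layout is a hypothetical choice; a fine-tuned LM would be
    trained on whatever layout its dataset uses.
    """
    spec_text = json.dumps(spec, sort_keys=True)
    return f"Chart specification:\n{spec_text}\n\nQuestion: {question}\nAnswer:"


def tallest_bar(spec: dict) -> str:
    """Read the answer to 'which bar is tallest?' directly from the spec.

    This shows that the information needed for such a question is present
    in the text of the specification, with no pixel processing involved.
    """
    x_field = spec["encoding"]["x"]["field"]
    y_field = spec["encoding"]["y"]["field"]
    row = max(spec["data"]["values"], key=lambda r: r[y_field])
    return row[x_field]


if __name__ == "__main__":
    print(build_cqa_prompt(EXAMPLE_SPEC, "Which category has the tallest bar?"))
    print(tallest_bar(EXAMPLE_SPEC))
```

The point of the sketch is only that a specification already carries the data and the encoding in textual form, so a text-only model can be given the chart without rendering it.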