Best Paper Award

Rapid and Precise Topological Comparison with Merge Tree Neural Networks

Yu Qin - Tulane University, New Orleans, United States

Brittany Terese Fasy - Montana State University, Bozeman, United States

Carola Wenk - Tulane University, New Orleans, United States

Brian Summa - Tulane University, New Orleans, United States

Room: Bayshore I + II + III

2024-10-15T17:10:00Z

Keywords

computational topology, merge trees, graph neural networks

Abstract

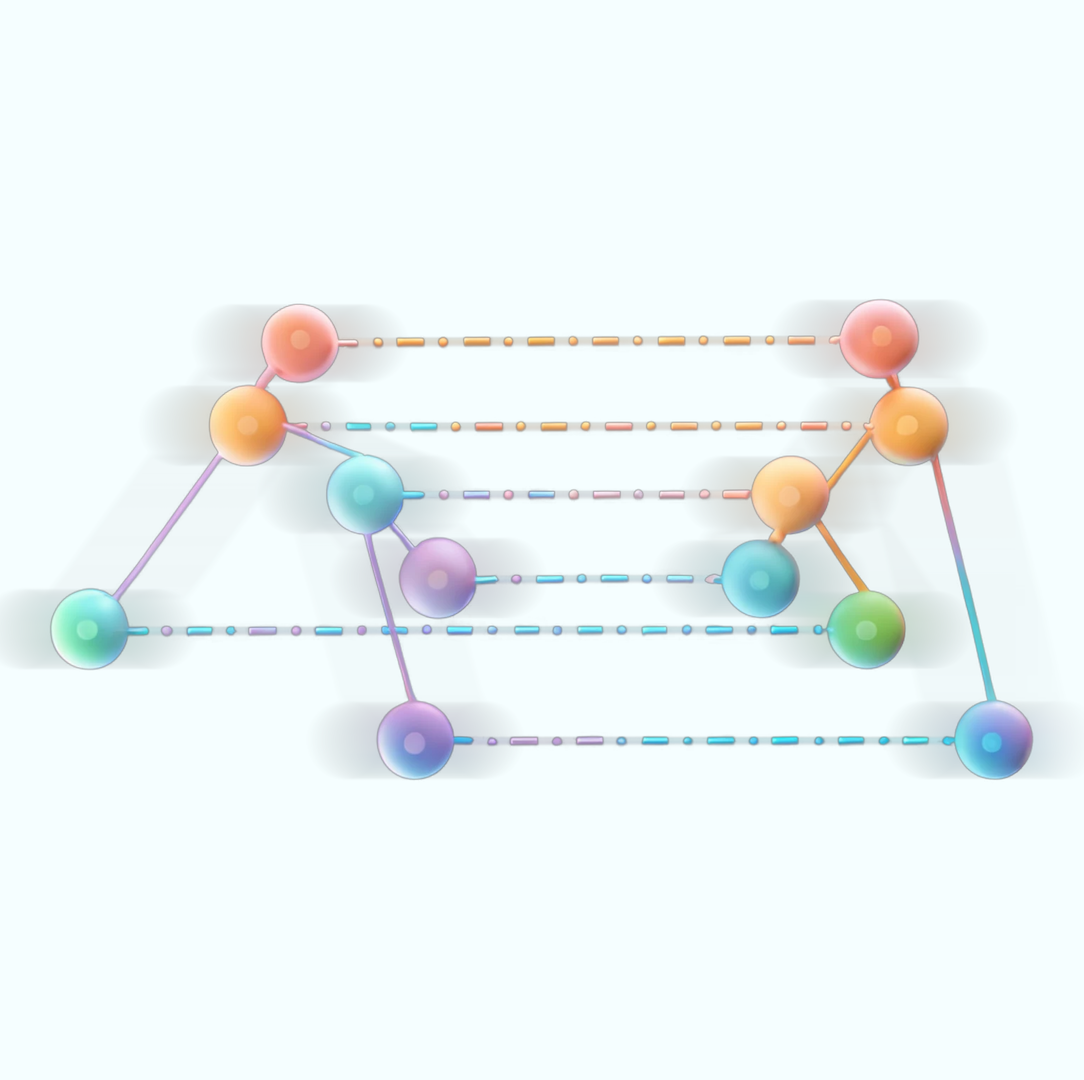

Merge trees are a valuable tool in the scientific visualization of scalar fields; however, current methods for merge tree comparison are computationally expensive, primarily due to the exhaustive matching between tree nodes. To address this challenge, we introduce the Merge Tree Neural Network (MTNN), a learned neural network model designed for merge tree comparison. The MTNN enables rapid and high-quality similarity computation. We first demonstrate how to train graph neural networks, which have emerged as effective encoders for graphs, to produce embeddings of merge trees in vector spaces for efficient similarity comparison. Next, we formulate the novel MTNN model, which further improves the similarity comparisons by integrating the tree and node embeddings with a new topological attention mechanism. We demonstrate the effectiveness of our model on real-world data in different domains and examine our model’s generalizability across various datasets. Our experimental analysis demonstrates our approach’s superiority in accuracy and efficiency. In particular, we speed up the prior state of the art by more than 100× on the benchmark datasets while maintaining an error rate below 0.1%.
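The general idea of comparing trees through vector embeddings, rather than through exhaustive node matching, can be illustrated with a small sketch. This is not the MTNN and not the authors' code: the function names `embed_tree` and `similarity`, the choice of node features (scalar value and degree), the untrained mean-aggregation step, and the `exp(-distance)` score are all assumptions made for illustration. A trained graph neural network would replace the fixed averaging with learned weights.

```python
import math


def embed_tree(values, edges, rounds=2):
    """Embed a non-empty tree as a 2-D vector (illustrative, untrained).

    values: scalar function value at each node (hypothetical input format).
    edges:  list of (u, v) index pairs describing the tree.
    """
    n = len(values)
    neighbors = [[] for _ in range(n)]
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)

    # Initial node features: scalar value and node degree.
    feats = [[float(values[i]), float(len(neighbors[i]))] for i in range(n)]

    # Message passing: each node averages its own features with its neighbors'.
    for _ in range(rounds):
        updated = []
        for i in range(n):
            group = [feats[i]] + [feats[j] for j in neighbors[i]]
            updated.append([sum(col) / len(group) for col in zip(*group)])
        feats = updated

    # Mean pooling over nodes gives one fixed-length vector per tree.
    return [sum(col) / n for col in zip(*feats)]


def similarity(a, b):
    """Map the Euclidean distance between two embeddings to a score in (0, 1]."""
    dist = math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
    return math.exp(-dist)


# Two small trees with the same structure but one differing node value.
tree_edges = [(0, 2), (1, 2), (2, 3)]
emb_a = embed_tree([0.0, 1.0, 2.0, 3.0], tree_edges)
emb_b = embed_tree([0.0, 1.5, 2.0, 3.0], tree_edges)
print(similarity(emb_a, emb_a), similarity(emb_a, emb_b))
```

Once each tree has an embedding, a comparison costs only a distance computation between two short vectors, which is where the speedup over exhaustive node matching comes from in embedding-based approaches of this kind.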