Attention-Aware Visualization: Tracking and Responding to User Perception Over Time

Arvind Srinivasan - Aarhus University, Aarhus, Denmark

Johannes Ellemose - Aarhus University, Aarhus N, Denmark

Peter W. S. Butcher - Bangor University, Bangor, United Kingdom

Panagiotis D. Ritsos - Bangor University, Bangor, United Kingdom

Niklas Elmqvist - Aarhus University, Aarhus, Denmark

Room: Bayshore II

2024-10-16T17:00:00Z

Keywords

Attention tracking, eyetracking, immersive analytics, ubiquitous analytics, post-WIMP interaction

Abstract

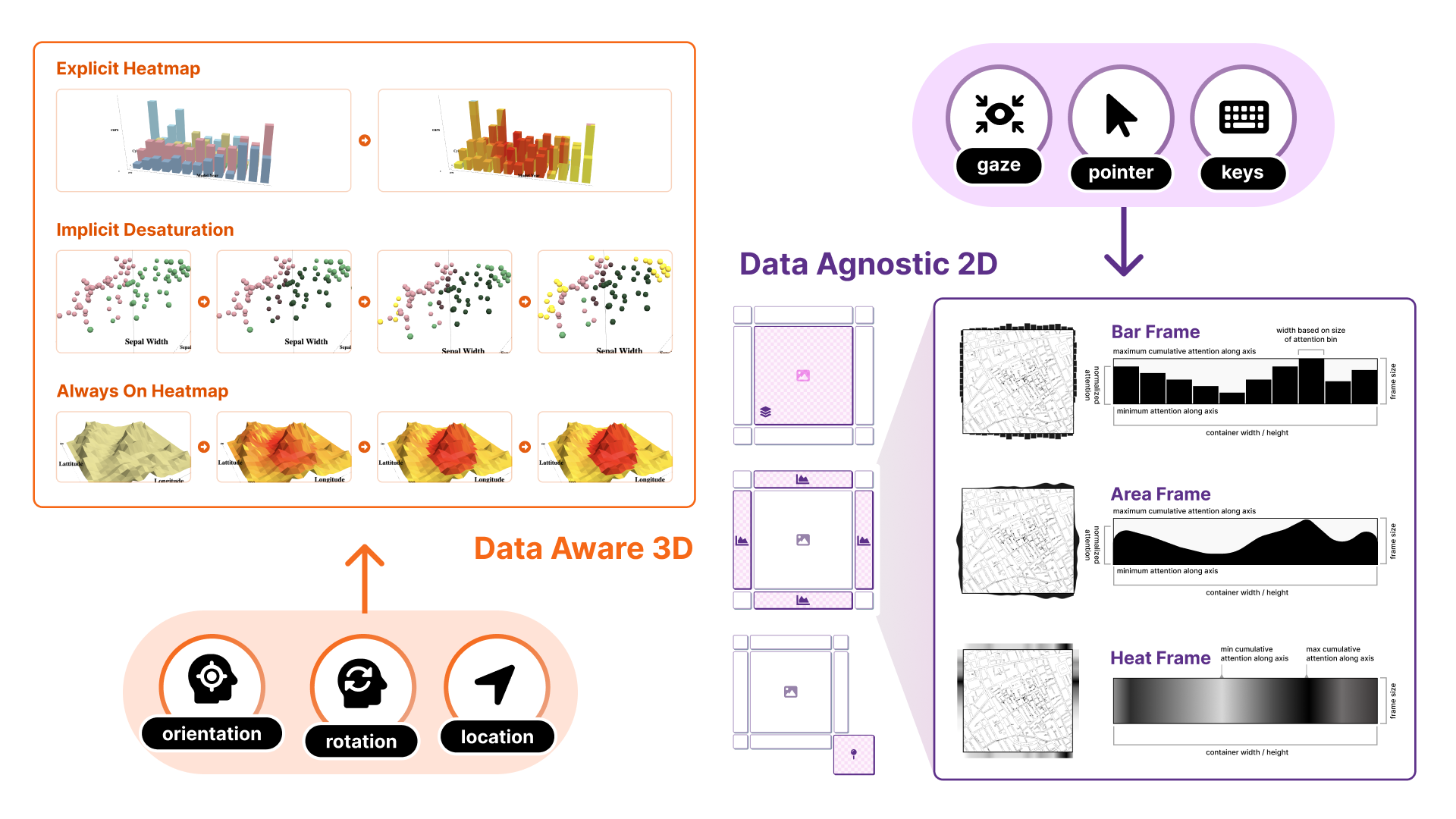

We propose the notion of attention-aware visualizations (AAVs) that track the user’s perception of a visual representation over time and feed this information back to the visualization. Such context awareness is particularly useful for ubiquitous and immersive analytics, where knowing which embedded visualizations the user is looking at lets those visualizations react appropriately to the user’s attention, for example by highlighting data the user has not yet seen. The approach consists of three components: (1) measuring the user’s gaze on a visualization and its parts; (2) tracking the user’s attention over time; and (3) reactively modifying the visual representation based on the current attention metric. In this paper, we present two separate implementations of AAV: a 2D data-agnostic method for web-based visualizations that can use an embodied eyetracker to capture the user’s gaze, and a 3D data-aware method that uses the stencil buffer to track the visibility of each individual mark in a visualization. Both methods provide similar mechanisms for accumulating attention over time and changing the appearance of marks in response. We also present results from a qualitative evaluation studying visual feedback and triggering mechanisms for capturing and revisualizing attention.
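The three components listed above (measure gaze, accumulate attention, react) can be illustrated with a minimal Python sketch of the 2D, data-agnostic case. This is not the authors' implementation. `Mark`, `AttentionTracker`, the bounding-box hit test with a pixel tolerance, the exponential half-life decay, and the `exp(-a / saturation)` mapping from attention to highlight strength are all hypothetical choices made for illustration. Gaze samples are assumed to arrive as screen-space `(x, y)` points from some eyetracker, or `None` when no gaze is available.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, Optional, Sequence, Tuple


@dataclass
class Mark:
    """Hypothetical 2D mark with an axis-aligned bounding box in screen pixels."""
    mark_id: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, gx: float, gy: float, radius: float = 0.0) -> bool:
        # Distance from the gaze point to the box (0 if inside); a hit if within `radius`.
        dx = max(self.x - gx, 0.0, gx - (self.x + self.width))
        dy = max(self.y - gy, 0.0, gy - (self.y + self.height))
        return math.hypot(dx, dy) <= radius


@dataclass
class AttentionTracker:
    half_life: float = 10.0     # assumed: seconds for accumulated attention to halve
    gaze_radius: float = 20.0   # assumed: pixel tolerance for eyetracker noise
    attention: Dict[str, float] = field(default_factory=dict)

    def update(self, marks: Sequence[Mark],
               gaze: Optional[Tuple[float, float]], dt: float) -> None:
        # (2) Accumulation over time: decay every mark's attention for dt seconds.
        decay = 0.5 ** (dt / self.half_life)
        for m in marks:
            self.attention[m.mark_id] = self.attention.get(m.mark_id, 0.0) * decay
        # (1) Measurement: credit dt seconds to each mark under the current gaze point.
        if gaze is not None:
            gx, gy = gaze
            for m in marks:
                if m.contains(gx, gy, self.gaze_radius):
                    self.attention[m.mark_id] += dt

    def highlight_weight(self, mark_id: str, saturation: float = 2.0) -> float:
        # (3) Reaction: 1.0 for unseen marks, falling toward 0.0 as attention grows,
        # so a renderer can keep emphasizing data the user has not yet looked at.
        a = self.attention.get(mark_id, 0.0)
        return math.exp(-a / saturation)
```

A renderer could, for example, scale each mark's highlight color or outline width by `highlight_weight` on every frame after calling `update`. The 3D, data-aware variant described in the abstract instead derives per-mark visibility from the stencil buffer, which this sketch does not model.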