Honorable Mention

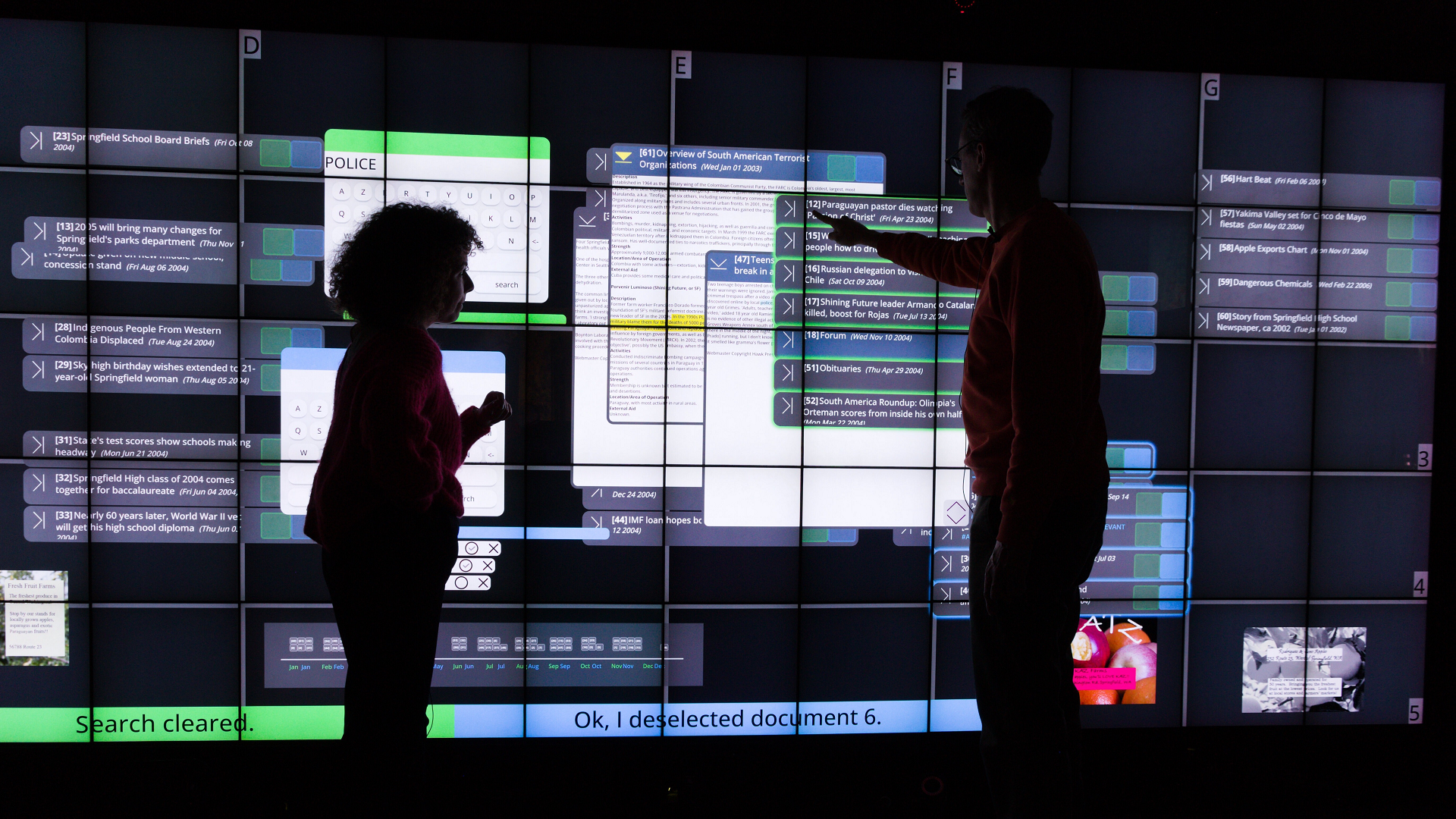

Talk to the Wall: The Role of Speech Interaction in Collaborative Visual Analytics

Gabriela Molina León - University of Bremen, Bremen, Germany

Anastasia Bezerianos - LISN, Université Paris-Saclay, CNRS, INRIA, Orsay, France

Olivier Gladin - Inria, Palaiseau, France

Petra Isenberg - Université Paris-Saclay, CNRS, Orsay, France. Inria, Saclay, France

Room: Bayshore V

2024-10-16T17:00:00Z

Keywords

Speech interaction, wall display, collaborative sensemaking, multimodal interaction, collaboration styles

Abstract

We present the results of an exploratory study on how pairs interact with speech commands and touch gestures on a wall-sized display during a collaborative sensemaking task. Previous work has shown that speech commands, alone or in combination with other input modalities, can support visual data exploration by individuals. However, it is still unknown whether and how speech commands can be used in collaboration, and for what tasks. To answer these questions, we developed a functioning prototype that we used as a technology probe. We conducted an in-depth exploratory study with 10 participant pairs to analyze their interaction choices, the interplay between the input modalities, and their collaboration. While touch was the most used modality, we found that participants preferred speech commands for global operations, used them for distant interaction, and that speech interaction contributed to the awareness of the partner’s actions. Furthermore, the likelihood of using speech commands during collaboration was related to the personality trait of agreeableness. Regarding collaboration styles, participants interacted with speech equally often whether they were in loosely or closely coupled collaboration. While the partners stood closer to each other during close collaboration, they did not distance themselves to use speech commands. From our findings, we derive and contribute a set of design considerations for collaborative and multimodal interactive data analysis systems. All supplemental materials are available at https://osf.io/8gpv2.